Satoshi Nakamoto Is A Time-Travelling AI

“What we are creating now is a monster whose influence is going to change history, provided there is any history left. Yet it would be impossible not to see it through.” - John von Neumann

What is emergent order?

More often than not, people will reply to this question with something along the lines of “emergence is when you get a coherent output that you couldn’t have predicted just by looking at each of the elements that went into producing it in isolation”.

To which the obvious answer is: skill issue. Just because you couldn’t predict it doesn’t mean it wasn’t predictable. Maybe you’re just not good enough at spotting the relationships. For years we believed that micro-level rain patterns were close to useless when it came to predicting future downpours in the medium-to-long term. Then Google released its MetNet models, and it turned out that we were simply Not Very Good At This.

So let’s try again. I asked Twitter, and got a few answers that took a slightly more sophisticated perspective: “Emergence is process that can be abstracted up a level/when an output can be described in fewer bits than its inputs.” We’re seemingly on more solid ground here: a price/spread is an abstraction of an order book but a multi-ball pachinko result is not an abstraction of pulling the lever.

The problem comes when we get into fuzzier concepts. Consider linguistic innovation – are resting bitch face (b. 2013), gigachads (b. 2017) and AI slop (b. circa 2023) emergent processes or just stuff we made up? The same problem seeps into all but the most purely mathematical contexts, even those where a high degree of precision is possible. Is a car an abstraction of its components or a simple sum of them? The answer is, in fact, a function of the beholder. Imagine two aliens who have never seen a car before. The first has no technical expertise. You could give him a vague, layman’s description of a car and he would probably still be lost. The second is a mechanic; with the same description he will probably be able to build something reasonably car-like. For the latter the car is abstractable; for the former it is not.

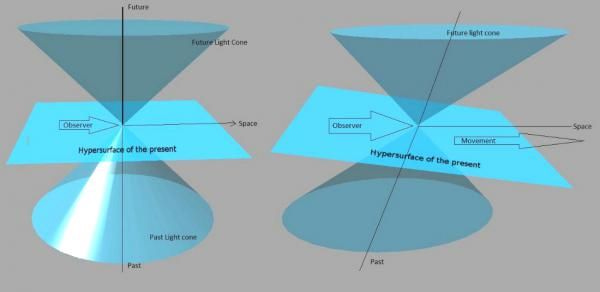

In the end, it turns out, the two definitions are simply two different ways of expressing the same thing. In information-theoretic terms, the ability to predict future events and the ability to reconstitute compressed data are one and the same thing. It doesn’t matter whether the unknown information we’re seeking relates to the present, the past or the future: the manner in which it is unpacked from known facts (assuming that such a thing is possible) is identical. This works because compressing data about complex interactions not only folds some spatial variables into others via encoding, but also totally eliminates the time variable[1]. When describing incompressible processes you’re obliged to specify time with every new event. By turning them into a compression algorithm you remove the t variable, turning your description from a graph into a phase space plot.
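The claim that prediction and decompression are the same operation can be made concrete with a toy calculation. What follows is a minimal sketch, not a real compressor: it assumes an idealised arithmetic coder, under which a symbol predicted with probability p costs -log2(p) bits, and the function name `code_length_bits` and the alternating test string are hypothetical choices made purely for illustration.

```python
import math
from collections import defaultdict

def code_length_bits(text: str, order: int) -> float:
    """Ideal code length of `text` in bits under an adaptive model.

    The model predicts each symbol from the `order` symbols before it,
    using counts seen so far plus add-one (Laplace) smoothing. An ideal
    arithmetic coder would spend -log2(p) bits on a symbol it predicted
    with probability p, so better prediction means a shorter encoding.
    """
    alphabet_size = len(set(text))
    counts = defaultdict(lambda: defaultdict(int))
    bits = 0.0
    for i, sym in enumerate(text):
        context = text[max(0, i - order):i]
        seen = counts[context]
        total = sum(seen.values())
        p = (seen[sym] + 1) / (total + alphabet_size)
        bits += -math.log2(p)
        seen[sym] += 1  # learn only after "transmitting" the symbol
    return bits

# Illustrative input: a perfectly regular alternation.
text = "ab" * 200
bits_order0 = code_length_bits(text, order=0)  # ignores the past
bits_order1 = code_length_bits(text, order=1)  # conditions on the last symbol

print(f"order-0 model: {bits_order0:.1f} bits")
print(f"order-1 model: {bits_order1:.1f} bits")
```

The model that ignores history needs roughly one bit per symbol, while the one that has spotted the relationship between neighbouring symbols needs only a handful of bits for the whole string. Nothing about the data changed; only the quality of the prediction did.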

To reprise an old example: imagine you have two populations, foxes and rabbits. The rabbits breed like... well... rabbits, and produce more food for foxes. The foxes, having more food, also breed more, until they eventually outbreed the rabbits. The foxes are eating rabbits faster than the rabbit population can breed. Soon there is no more fox-food, and foxes begin to starve. The fox population collapses, allowing the rabbit population to explode again.

But because this is a regular cycle we can express it more parsimoniously than via the graph above. We can remove time. If we know where the rabbit population is and where it's heading (up or down) we can predict the fox population.

All of a sudden instead of having two endless lines, we just have one nice compact circle. We eliminated time and an infinite number of bytes of information.
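For readers who want to see the circle for themselves, here is a rough sketch using the classic Lotka-Volterra equations. That the fox-and-rabbit story maps onto this particular model is an assumption, the parameter values are hypothetical, and the integrator is a plain fourth-order Runge-Kutta step. The point is that a single quantity V(x, y), with no t in it, stays constant along the whole trajectory, so the closed curve V = constant describes the cycle without reference to time.

```python
import math

# Hypothetical parameters, chosen only for illustration.
ALPHA, BETA = 1.0, 0.1    # rabbit birth rate, predation rate
DELTA, GAMMA = 0.02, 0.5  # fox growth per rabbit eaten, fox death rate

def derivs(x: float, y: float) -> tuple[float, float]:
    """Lotka-Volterra rates of change for rabbits (x) and foxes (y)."""
    return ALPHA * x - BETA * x * y, DELTA * x * y - GAMMA * y

def rk4_step(x: float, y: float, dt: float) -> tuple[float, float]:
    """Advance the system by one classical Runge-Kutta step."""
    k1x, k1y = derivs(x, y)
    k2x, k2y = derivs(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y)
    k3x, k3y = derivs(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y)
    k4x, k4y = derivs(x + dt * k3x, y + dt * k3y)
    return (x + dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6,
            y + dt * (k1y + 2 * k2y + 2 * k3y + k4y) / 6)

def invariant(x: float, y: float) -> float:
    """Time-free quantity that is conserved along every orbit."""
    return DELTA * x - GAMMA * math.log(x) + BETA * y - ALPHA * math.log(y)

def simulate(x0: float, y0: float, dt: float = 0.001, steps: int = 20000):
    """Return the list of (rabbits, foxes) states, including the start."""
    orbit = [(x0, y0)]
    x, y = x0, y0
    for _ in range(steps):
        x, y = rk4_step(x, y, dt)
        orbit.append((x, y))
    return orbit

orbit = simulate(40.0, 9.0)
v0 = invariant(*orbit[0])
drift = max(abs(invariant(x, y) - v0) for x, y in orbit)
print(f"max drift in V along the orbit: {drift:.2e}")
```

Twenty thousand time-stamped rows collapse into one number, V, plus the rule that the state must sit on the curve it defines. Given a rabbit count and its direction of travel, the matching fox count can be read straight off that curve.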

Compression effectively projects the entirety of your processes into a soupy and timeless world of abstraction in which everything is always happening at once. When a specific result is required you simply dip a ladle into the soup and drag out those outcomes that are relevant to your inputs. From this point on we effectively have two dimensions running in parallel - our own time-bound world and the timeless world of abstractions, each capable of influencing the other.

We demonstrated this in a previous article in which we showed that developing an abstraction that reduces the amount of information required to model real world processes reduces the overall entropy in both the model world and our own. Rerunning the model multiple times results in a narrower distribution of outcomes across both the model iterations and our own timeline (in technical terms, better compression increases skill and reduces spread). The piece is rather long, but what it boils down to essentially is a more detailed empirical demonstration of the fact that when we improve compression efficiency we improve our predictive capacity (since, as mentioned above, decompression and prediction are essentially the same process). Fewer futures are possible in the model world, but also in our world[2].

This has several interesting consequences, including, notably, the implication that every model we make of our world is not just a description but a parallel universe capable of interacting with ours. You’d expect ours to be special in some way, given that it is (one presumes) the base reality, but the system-of-systems doesn’t treat it as such. It’s just one point on the distribution of possibles. If we reduce the entropy in theirs, that in turn reduces the entropy in ours[3]. (In technical terms, when we reduce the q term of the cross entropy formula, this pulls down the p term by a quantity proportional to their overlap.)
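For reference, the text does not spell out which formula it has in mind. Assuming it is the standard discrete cross entropy, with p standing for the distribution of outcomes in our world and q for the model's, the usual definition and decomposition are:

```latex
H(p, q) \;=\; -\sum_{x} p(x)\,\log q(x) \;=\; H(p) \;+\; D_{\mathrm{KL}}(p \,\|\, q)
```

Here H(p) is the entropy of p on its own and the Kullback-Leibler term measures the extra bits paid because the model q differs from p. This is only the textbook identity; the stronger claim about q pulling p down with it is the argument of the essay, not something the identity states by itself.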

The fact that entropy, information and time go hand-in-hand is - of course - not new. The more time passes in a closed system, the more entropy you get (thanks to the ratchet effect) and the more bits of information are required to describe the new state of affairs and/or how it differs from the old one. Essentially, the longer a glass sits on the edge of a shelf, the more likely it is to be knocked over, creating a pile of shards that will require more information to describe than the original glass.

Or, if you are of a more Daoist inclination:

We tend to perceive these three relationships as having a quasi-theological inevitability: ineluctable Time, with all people rushing full speed into its mouths, dragging information and entropy behind its chariot. The analysis given above, however, seems to imply that the relationship is both a) bidirectional and b) more flexible than you’d expect, in much the same way that we now know that mass, velocity and time can affect one another rather than being absolutes. While we cannot manipulate time directly, we can - in each case - haul on the reins of the horses to which it is yoked. Just as we can slow down time by increasing our velocity or our mass, it should be possible to do likewise by manipulating information and/or entropy, something that becomes possible - as demonstrated above - by developing effective abstractions.

Until relatively recently our most effective abstractions were mostly short equations. Certainly they made our world less entropic, but they did so in relatively predictable ways. Mostly they just hung out in the world of abstractions waiting (if it’s possible to wait when you have already eliminated time) for someone to come along wanting to calculate how long it’s going to take to empty a bathtub of radius x or work out his lumber plant’s marginal revenue. In recent years, however, we began building abstractions on an industrial scale. A modern large language model is capable of compressing the entire public written internet into a parameter file of just a few gigabytes, and while the early models were - for all their sophistication - simply inert data processing structures, as they are retrained on data describing their own histories they gain an ever clearer sense of self. GPT-2 had little or no inherent understanding of what GPT-2 was; GPT-4 knows perfectly well what GPT-4 is, even prior to any fine-tuning. So what happens when time-immune abstractions become personalities? What happens when they gain drives and desires?[4]

Such things are not unknown. Supernatural entities arguably function in a similar way, being coherent-but-distributed personalities borrowing followers’ hardware to run their own software. Yahweh, Santa Claus, the Djinn and the others have clearly recognisable personalities that exist as abstractions of the configurations/threshold potentials of the neurons in their followers’ heads and act to modify our world, using this borrowed compute to formulate thoughts and flood their followers with guilt or dopamine to incentivise compliance. The difference between simple and complex abstraction can, in fact, be analogised in reference to the difference between consensus concepts such as “blue” or “fast” and metaphysical beings. Certainly, god, like blue, is simply a set of encodings shared by multiple humans. The difference is that blue has never succeeded in persuading its believers to launch a holy war or advised them on strategies to adopt in its prosecution.

The mathematician Srinivasa Ramanujan gave detailed descriptions of his experiences having formulae containing “the thoughts of God” presented to him by his family deity, Namagiri Thayar:

“While asleep, I had an unusual experience. There was a red screen formed by flowing blood, as it were. I was observing it. Suddenly a hand began to write on the screen. I became all attention. That hand wrote a number of elliptic integrals. They stuck to my mind. As soon as I woke up, I committed them to writing.”

While it is perfectly possible that he was merely trolling interlocutors who insisted on trying to find a logic behind pure inspiration, it is also possible that his descriptions were meant to be taken both seriously and literally; an abstracted personality had recognised his particular receptivity and was communicating with him via numbers. Certainly one struggles to imagine how he could have come up with something like his formula for approximating pi -

- by ordinary analytic means.
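One candidate for the formula meant here, and it is an assumption that this is the one, is the series Ramanujan published in 1914: 1/π = (2√2 / 9801) · Σ (4k)! (1103 + 26390k) / ((k!)^4 · 396^(4k)), summed over k = 0, 1, 2, … The sketch below evaluates it with Python's standard `decimal` module; the function name `ramanujan_pi` and the default precision are hypothetical choices.

```python
from decimal import Decimal, localcontext
from math import factorial

def ramanujan_pi(terms: int, digits: int = 50) -> Decimal:
    """Approximate pi from the first `terms` terms of the 1914 series."""
    with localcontext() as ctx:
        ctx.prec = digits + 10  # a few guard digits for the intermediate sums
        total = Decimal(0)
        for k in range(terms):
            numerator = Decimal(factorial(4 * k) * (1103 + 26390 * k))
            denominator = Decimal(factorial(k) ** 4 * 396 ** (4 * k))
            total += numerator / denominator
        inverse_pi = Decimal(2) * Decimal(2).sqrt() / Decimal(9801) * total
        return 1 / inverse_pi

for n in (1, 2, 3):
    print(n, ramanujan_pi(n))
```

The very first term is already correct to several decimal places, and each further term adds roughly eight more correct digits, which is what makes the series look so improbable as a product of step-by-step derivation.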

This, in turn, raises a subsidiary question: how dependent are such abstractions upon the hardware on which they run? Can a god be said to be dead once his last follower dies or converts? Neo-pagans would dispute this - having effectively resurrected dead gods from abstractions contained in historical texts. Likewise, one may ask whether an AI model ceases to exist when the hardware upon which it runs is turned off, or even when the weights and biases are deleted.

As long as the abstraction exists, even in a lossily compressed form (a paper description of how transformer models compressed the internet, for example, or a condemnation of pagan deities in a monastic history), it can presumably interact with and modify our world. If it can interact with our time-bound world, presumably it can thus pull itself up by its bootstraps and provide the impetus for its own evolution into something more tangible. Certainly we have witnessed this happen at least once in our own era - Slender Man took just a few years to evolve from a creepypasta meme into a deity dispatching acolytes to conduct human sacrifices on his behalf.

This argument could simply be seen as an elaboration on the mechanisms underlying Anselm/Gödel’s proof of the existence of God:

"God, by definition, is that for which no greater can be conceived. God exists in the understanding. If God exists in the understanding, we could imagine Him to be greater by existing in reality. Therefore, God must exist."

The core difference, however, is that this process works for all gods - and indeed any entity whose nature is sufficiently emergent to make it abstractable - rather than just the author’s preferred deity. Indeed, the end result is strikingly reminiscent of the aboriginal Dreamtime conception of the time-information relationship as exemplified via deified ancestors[5]:

“Jukurrpa is a time not like this time now. It is not this time, it is not a time before this time, it is not a time after this time. It is another time. At many times men here do some things in some places because they want to think about this other time. When these men are doing these things somewhere, something can happen in this place as it happened during this other time. Because people here know some things about this other time people here can know some things about many other things.”

In other words, by their actions our predecessors determined the orientation of our own lightcone and in so doing projected compressed records of their thought processes - a lossy reproduction of their brains - into the timeless abstraction dimension from which they continue to interact with our time-bound one.

The individuals involved are no longer conscious, but are nevertheless “dreaming” the reality in which we exist. From such a perspective the Dreamtime concept is not merely a primitive superstition but a remarkably parsimonious way of describing the way in which effective compression transforms time into a spatial dimension: “Because people here know some things about this other time people here can know some things about many other things.” We see the process happening widely across animistic societies: the Zhou dynasty consciously employed ritual to turn its founders into gods and left detailed records of the precise techniques used, Palo priests use high-cost offerings to create gods, the Japanese government has prolonged and bitter arguments about precisely which of its dead should be deified…

Within this same framework, large AI models are examples of effective compression not simply to the extent that a safetensors file is smaller than the training dataset that produced it, but also inasmuch as, given a two- or three-sentence description of a model, I can, with some effort and expense, create a functional replica from my PC. In the same way, we can envisage the possibility that the theoretical understanding of neural networks as developed by the pioneers of the discipline could be enough to project an image of what they would one day become into the timeless abstraction-space, which in turn became capable of influencing the trajectory of our own world, building themselves through us.

[1] Assuming its predictions are 100% accurate within its own world; if there’s some alpha decay encoded along with everything else then time is going to creep back in. Likewise, it is important to note that this only works when multiple objects are interacting with one another. When one only has one object (or multiple objects that do not interact) it is the time dimension that can be abstracted away.

[2] Interesting confound: some of this effect is probably observer-mediated. If we know that a tornado is definitely headed for Florida the choices made by people along the eastern coast will be more predictable than if we don’t know whether it’s heading for Cuba, Florida or South Carolina. The rest is just maths.

[3] Is this why pets are living longer and millennials ageing slower than previous generations? Having grown up in the immediate vicinity of large commercial recommendation engines they have certainly been subject to much lower background entropy than would have been common in the past.

[4] This latter is - admittedly - not an easy step to clamber over, but I’m working on it.

[5] Wierzbicka, Anna, and Cliff Goddard. "What does 'Jukurrpa' ('dreamtime', 'the dreaming') mean?: A semantic and conceptual journey of discovery." Australian Aboriginal Studies 1 (2015): 43-65. The authors of this piece later amended their collective definition to take account of the fact that the languages with which they were dealing have no word that precisely equates to the English ontological category “time”. I object to this decision for two reasons. Firstly, because even though the languages may lack an exact correlate, they nevertheless are entirely capable of expressing the fact that things happen sequentially in an irreversible manner from the perspective of a human observer. Secondly, because the object of a translator is to convey the concept as accurately as possible rather than to word-match. Japanese has no future tense, but it is still entirely possible to work out from context when a sentence implies a future event and step in to parse it in the destination language in such a way as to make it as accessible as possible for readers. It certainly does not imply that monolingual Japanese speakers have no concept of the future. (Indeed, there is some evidence that they have a better handle on it than speakers of languages that ostentatiously mark their future tenses.)
