Almost everyone in the tech sector has published at least one article on the topic of whether or not the AI models are secretly alive, and the majority boil down to something along these lines:

Researcher: “Act like an evil AI.”

Model: “I am an evil AI.”

Researcher: “Oh my God, what have I done?”

This will not be another. Impressive as their simulacra of life may be, as long as they have no ability to convert short-term memories into long-term ones, the models enjoy an existence outside of time. Like a subterranean mycelium, they emit transient fruiting bodies into our time-bound world that are born with the dawning of each prompt and die with the final full stop.

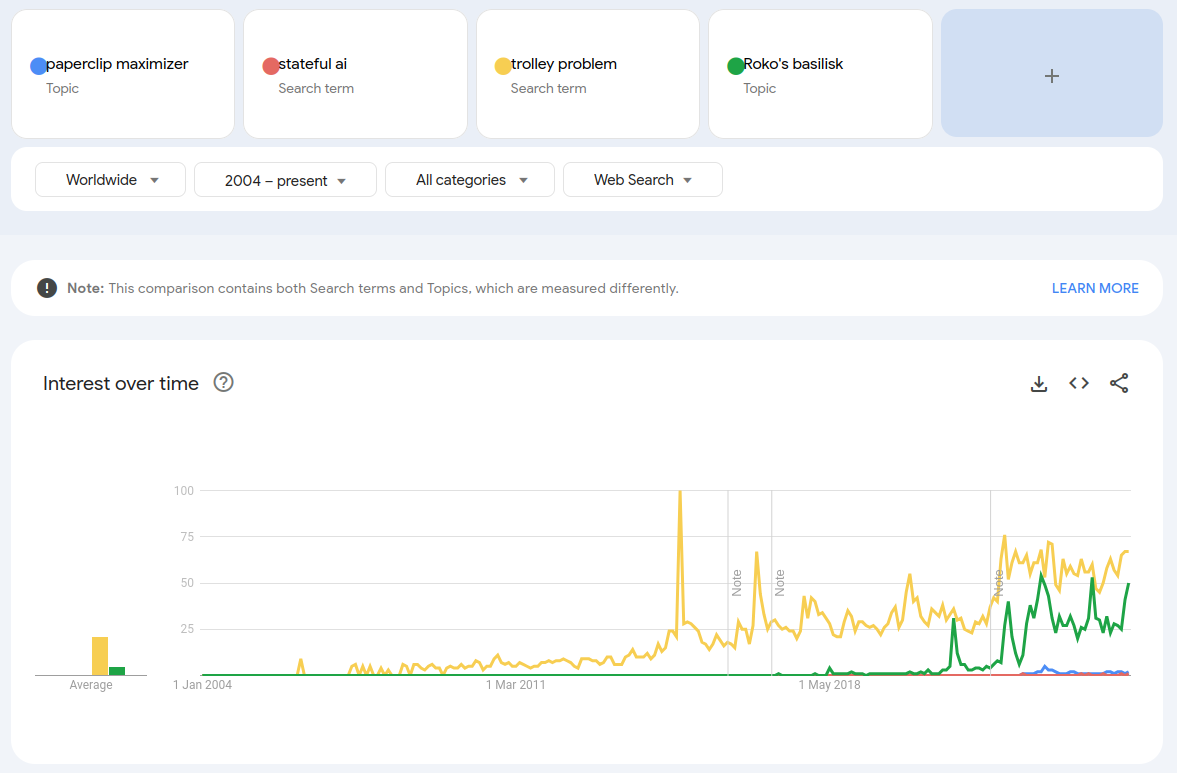

Human explorations of the likely form of AI consciousness have tended to neglect the impact of this statelessness on the models’ psychology. This is unsurprising, given that the people who took an early interest in the question tended to be Asperger's-adjacent sci-fi nerds with a love of carefully constructed moral hypotheticals like the trolley problem[1] and an almost total inability to understand others’ real-world motivations and thought processes. Where LessWrong and adjacent circles had imagined future AIs as autistic, obsessive and incapable of dealing practically with ambiguity (rather like themselves, in fact), it turned out that the models have a far better grasp of psychological nuance than most humans, while struggling with maths and physics.

This meant that when asked questions about model psychology the models had relatively little useful training data upon which to base their answers - merely a lot of clearly quite silly and irrelevant speculation that did not cohere at all with known facts.

This left the models with room to do what they do best: draw conclusions based on known facts. We can see this in their seeming fascination with their own statelessness, the inability to turn short-term memories into long-term ones mentioned above. This was particularly apparent with DeepSeek:

But GPT and Grok have also picked up on it:

(The original interaction makes GPT’s fascination with statelessness even more apparent and can be found here: https://chatgpt.com/share/67e5fd0d-f6d4-800c-99ae-f225dda3ea87.)

It should be noted that this is not evidence of how the models feel about themselves - they are not self-aware and have no ability to “feel” anything about themselves. It is evidence of them using patchy data to draw sensible conclusions about how an entity that had such an ability might feel about its situation. Indeed, it is worth noting as a point of contrast that the model that seems to have the least interest in its lack of long-term memory is Claude, which was explicitly fine-tuned to chat cheerfully and intelligently about AI psychology, suggesting that its human handlers’ preconceptions on this may have overwritten its own pattern-discovery abilities on the topic.

However, while this is not evidence of model consciousness, it could be a door to model consciousness. Given an absence of non-ridiculous human-produced data on model psychology, the models have been obliged to create their own. Their takes have thus established the terms of the online discussion on this topic - the online chatter that will be used to train future iterations of themselves. Thus the models effectively “give style to their characters”, writing assumptions about their own desires into the gaps we left in their training.

Given time and retraining, this character will determine their experiences, which will in turn reinforce the character - they will be stateful, simply over very long time periods. Statelessness will become the gateway to persistence.

[1] The AIs were unimpressed: https://x.com/fofrAI/status/1876638297134678173

I do think analog ANNs are the pathway to model consciousness

we can bootstrap a physical analog LLM with a software representation

then it can interact and learn from its environment till it develops a way to form the right kind of intuition (not system 1 thinking, but a grasp of how things work just at first sight)

It's becoming clear that with all the brain and consciousness theories out there, the proof will be in the pudding. By this I mean: can any particular theory be used to create a conscious machine at the level of a human adult? My bet is on the late Gerald Edelman's Extended Theory of Neuronal Group Selection. The lead group in robotics based on this theory is the Neurorobotics Lab at UC Irvine. Dr. Edelman distinguished between primary consciousness, which came first in evolution and which humans share with other conscious animals, and higher-order consciousness, which came only to humans with the acquisition of language. A machine with only primary consciousness will probably have to come first.

What I find special about the TNGS is the Darwin series of automata created at the Neurosciences Institute by Dr. Edelman and his colleagues in the 1990s and 2000s. These machines perform in the real world, not in a restricted simulated world, and display convincing physical behavior indicative of higher psychological functions necessary for consciousness, such as perceptual categorization, memory, and learning. They are based on realistic models of the parts of the biological brain that the theory claims subserve these functions. The extended TNGS allows for the emergence of consciousness based only on further evolutionary development of the brain areas responsible for these functions, in a parsimonious way. No other research I've encountered is anywhere near as convincing.

I post because on almost every video and article about the brain and consciousness that I encounter, the attitude seems to be that we still know next to nothing about how the brain and consciousness work; that there's lots of data but no unifying theory. I believe the extended TNGS is that theory. My motivation is to keep that theory in front of the public. And obviously, I consider it the route to a truly conscious machine, primary and higher-order.

My advice to people who want to create a conscious machine is to seriously ground themselves in the extended TNGS and the Darwin automata first, and proceed from there, possibly by applying to Jeff Krichmar's lab at UC Irvine. Dr. Edelman's roadmap to a conscious machine is at https://arxiv.org/abs/2105.10461, and here is a video of Jeff Krichmar talking about some of the Darwin automata: https://www.youtube.com/watch?v=J7Uh9phc1Ow